Projects

- Propose a heterogeneity-aware distributed machine learning system

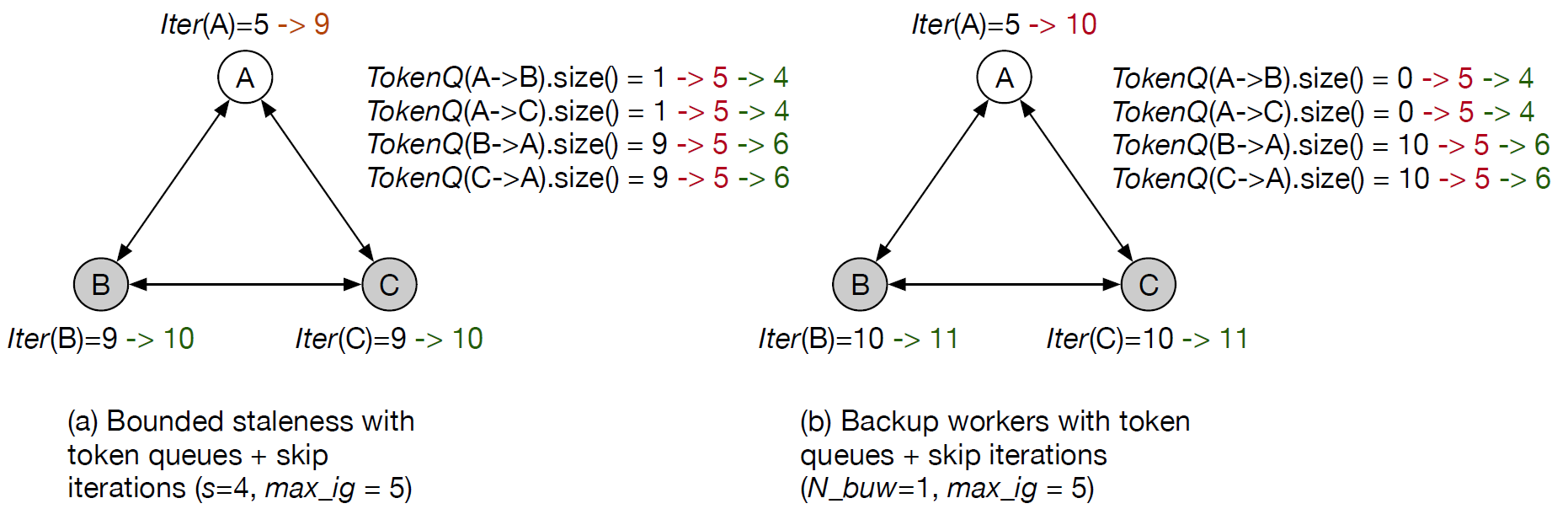

We propose the first heterogeneity-aware decentralized training protocol. Based on the iteration gap, a unique characteristic of decentralized training that we identified, we propose a queue-based synchronization mechanism that efficiently implements backup workers and bounded staleness in the decentralized setting. To cope with deterministic slowdown, we further propose skipping iterations, which mitigates the effect of slower workers. We built a prototype implementation on TensorFlow. Experimental results on CNN and SVM workloads show significant speedups over standard decentralized training in heterogeneous settings.

The figure above gives two examples of skipping iterations and of token queues, our mechanism for bounding the iteration gap so that the distributed training algorithm executes correctly. Worker A is a straggler. Changes in red mark the skip, and changes in green mark the new progress that the skip enables.
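To make the token-queue idea concrete, here is a minimal single-process Python sketch of how per-edge tokens can bound the iteration gap between neighboring workers. It is an illustration under stated assumptions, not the prototype's TensorFlow implementation: the class name `TokenQueues`, the undirected ring topology, the `max_gap` parameter, and the tick-based straggler schedule are all hypothetical, and backup workers and iteration skipping are left out.

```python
class TokenQueues:
    """Hypothetical per-edge token counters that bound the iteration gap.

    tokens[(i, j)] is the number of iterations worker j may still start
    before it must wait for neighbor i. It always equals
    max_gap + iteration[i] - iteration[j], so keeping it non-negative
    guarantees iteration[j] - iteration[i] <= max_gap on every edge.
    Assumes an undirected graph: i in neighbors[j] iff j in neighbors[i].
    """

    def __init__(self, neighbors, max_gap):
        self.neighbors = neighbors
        self.iteration = {w: 0 for w in neighbors}
        self.tokens = {(i, j): max_gap for j in neighbors for i in neighbors[j]}

    def try_advance(self, j):
        """Let worker j finish one iteration if every neighbor has granted a token."""
        if any(self.tokens[(i, j)] == 0 for i in self.neighbors[j]):
            return False  # j is already max_gap iterations ahead of some neighbor
        for i in self.neighbors[j]:
            self.tokens[(i, j)] -= 1  # consume one token from each neighbor's queue
            self.tokens[(j, i)] += 1  # let each neighbor run one more iteration
        self.iteration[j] += 1
        return True


# Usage: a ring of four workers in which A is a straggler.
ring = ["A", "B", "C", "D"]
neighbors = {w: [ring[(k - 1) % 4], ring[(k + 1) % 4]] for k, w in enumerate(ring)}
queues = TokenQueues(neighbors, max_gap=2)
for tick in range(40):
    for w in ring:
        if w == "A" and tick % 4 != 0:
            continue  # A only gets to compute on every fourth tick
        queues.try_advance(w)
print(queues.iteration)  # {'A': 10, 'B': 12, 'C': 14, 'D': 12}
```

Because a worker that is `max_gap` iterations ahead of a neighbor runs out of tokens on that edge and waits, the fast workers stall a bounded distance ahead of straggler A instead of drifting arbitrarily far. C ends four iterations ahead of A, which is allowed because the two are not neighbors: the bound holds per edge.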

Publication: ASPLOS 2019.

- Work with senior students on parallel Finite State Machines (FSM) with Convergence Set Enumeration

We develop a new computation primitive for FSMs, state-set-to-state-set mapping, that efficiently supports enumerative FSM execution. We propose a convergence set prediction algorithm, along with re-execution mechanisms that recover from occasional mispredictions. Our approach significantly outperforms Lookback Enumeration (LBE) and the Parallel Automata Processor (PAP).
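The sketch below illustrates the general idea behind enumerative FSM parallelization: split the input into chunks, run each chunk from every possible start state, and merge start states into one set as soon as their paths converge, so that a set of start states is advanced as a single path. It is a minimal Python illustration, not the paper's primitive; it omits convergence set prediction and re-execution, and the names `run_chunk`, `parallel_fsm`, `run_sequential`, and the example "ab"-detector FSM are hypothetical.

```python
def run_chunk(delta, states, chunk):
    """Run one chunk from every start state, merging paths that converge.

    `groups` maps each current state to the set of start states that reach it,
    so once two paths converge they are advanced as a single path.
    Returns a dict from start state to end state for this chunk.
    """
    groups = {s: {s} for s in states}
    for sym in chunk:
        merged = {}
        for cur, starts in groups.items():
            merged.setdefault(delta[(cur, sym)], set()).update(starts)
        groups = merged
    return {s: end for end, starts in groups.items() for s in starts}


def parallel_fsm(delta, states, start, text, n_chunks):
    """Split `text` into chunks, enumerate each chunk, then compose the results."""
    size = max(1, -(-len(text) // n_chunks))  # ceiling division, at least 1
    chunks = [text[k:k + size] for k in range(0, len(text), size)]
    # Each mapping is independent, so a real system would compute them in parallel.
    mappings = [run_chunk(delta, states, c) for c in chunks]
    state = start
    for mapping in mappings:  # cheap sequential composition of chunk results
        state = mapping[state]
    return state


def run_sequential(delta, start, text):
    """Reference single-threaded FSM execution."""
    state = start
    for sym in text:
        state = delta[(state, sym)]
    return state


# Example FSM: state 2 means the substring "ab" has been seen (absorbing).
STATES = [0, 1, 2]
DELTA = {(0, "a"): 1, (0, "b"): 0,
         (1, "a"): 1, (1, "b"): 2,
         (2, "a"): 2, (2, "b"): 2}

text = "bbbabbbb"
print(parallel_fsm(DELTA, STATES, 0, text, 2), run_sequential(DELTA, 0, text))  # 2 2
```

In the second chunk, `"bbbb"`, start states 1 and 2 both reach state 2 after the first symbol and are advanced together from then on; exploiting this kind of convergence is what keeps enumeration affordable.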

Publication: MICRO 2018.

- Work with senior students on mining big performance data from hardware counters

We propose a rigorous data mining methodology that makes sense of hardware performance data through data cleaning, event ranking, pruning, and event interaction analysis. Our methods significantly reduce errors and can identify important configuration parameters.

Publication: MICRO 2018.

- Apply transfer learning to sincerity and deception prediction

We apply transfer learning to modeling human behavioral traits. We propose a stacked generalization framework for predicting sincerity and deception that uses the Geodesic Flow Kernel (GFK) transformation to reduce the mismatch between in-domain and out-of-domain data. Our model achieves significantly better results than baseline models trained on in-domain data only.

Publication: Interspeech 2017.